This is a post I hoped I wouldn’t have to write. After watching for over two weeks as InfoWorld misrepresented the particulars of my resignation as Enterprise Desktop blogger, I feel I’ve given the publication ample opportunity to come clean and admit they were complicit in the Craig Barth ruse.

Since it’s now clear that no such admission is forthcoming, I feel I have no choice but to release the following email exchange which demonstrates, without question, that InfoWorld Executive Editor Galen Gruman knew of my ownership of the exo.blog; that he knew I was using it as source material for my InfoWorld blog; and that Gregg Keizer was regularly quoting from the exo.blog and its sole public “representative,” Craig Barth. Moreover, it shows that Galen was willing to assist me in promoting the very same exo.blog content, even going so far as offering to use InfoWorld staff writers to create news copy based on my numbers.

And lest he somehow try to escape scrutiny by passing all blame down to his subordinate, I’ve also included an email from InfoWorld Editor in Chief Eric Knorr (i.e. the guy who “fired” me with much sanctimonious aplomb) which shows that he, too, was clearly aware of my ownership of all sites, content and materials associated with the exo.performance.network.

Note: Conspiracy theorists, please scroll to the bottom of this post for the requisite SMTP header disclosure.

But first, I need to set up the timeline:

The following exchange took place roughly one week before the infamous ComputerWorld Windows 7 memory article. I was pushing Galen to let me run another story in InfoWorld that would reference the exo.blog data on browser market share, and he replied by pointing out that I had done something similar just a few weeks earlier, with Gregg Keizer quoting from my exo.blog. He also spoke of assigning staff writer Paul Krill to begin quoting from my exo data as part of a new regular news component for InfoWorld.

I responded that I had no problem with him having Paul Krill write up my usage data, but I requested that he approach it the same way that Gregg [Keizer] had in the article Galen had referenced earlier and thus not use my name but instead refer to xpnet.com as a separate entity. I closed that paragraph by emphasizing that I was determined to curry favor with every beat writer I could find, including Gregg Keizer, and that there would be no real overlap between the data I published in my blog (i.e. exo.blog) and what I did for InfoWorld.

A couple of items to note:

- At no time prior to the “scandal” breaking did I, Randall C. Kennedy, publish any research articles through the exo.blog. All articles were published through the generic “Research Staff” account and subsequently fronted to the media by the fictitious Craig Barth.

- Galen Gruman clearly acknowledges my ownership of both the exo.performance.network and the exo.blog, and notes how he saw my data being referenced in ComputerWorld. Yet at no time did I, Randall C. Kennedy, ever represent the exo.blog, xpnet.com or Devil Mountain Software, Inc., to the media outside of InfoWorld, nor was I ever directly quoted as such in any of Gregg Keizer’s articles or articles from other authors or publications.

So, in the Gregg Keizer-authored article that he acknowledges reading, Galen would have seen copious references to a “Craig Barth, CTO.” And yet he makes no mention of this contradiction. Nor does he take issue with my repeated claims to be the owner of the exo blog and also the person seeking to get Gregg Keizer and others to quote me (in the guise of Craig Barth) and exo.blog’s content.

Needless to say, this is damning to InfoWorld. Not only does it show that members of their senior executive staff were aware of the ruse, it also shows that they were actively working with me to take advantage of the situation, to the benefit of both parties: Me, by allowing me to quote from the Craig Barth-fronted exo.blog’s content in my Enterprise Desktop blog; and InfoWorld, by assigning a staff writer to create original InfoWorld content based on the exo.performance.network’s data and conclusions.

Important: I am not seeking absolution here. I accept that what I did crossed the line, no matter how benign my intentions. Rather, what I am doing here is exposing the lie behind InfoWorld’s high-handed dismissal of their leading blogger because, as they claim, he “lied to them.” On the contrary, InfoWorld was in on the ruse from the earliest stages, and the fact that they looked the other way – and then tried to cover their exposure by hanging me out to dry – speaks volumes about the “ethics” and “integrity” of InfoWorld as a publication and of IDG as a whole.

So, without further ado, here is the email exchange that InfoWorld wishes never took place. The following excerpts were taken directly from the last reply in a series of four emails that passed between Galen Gruman and myself. I’ve highlighted the most relevant bits in red so that they stand out from the bulk of the email text. I’ve also re-ordered the original messages and replies so that they proceed, from the top down, in chronological order vs. the normal bottom-up threading applied by Outlook.

Finally, I’ve added breaks/numbered headers identifying each stage of the conversation. You can see the original, unformatted contents of the email thread by clicking this link.

Note: The SMTP header is included at the bottom of this post showing the date, time and mail delivery path taken by the 2nd Reply From Galen message from which the various thread sections below were excerpted.

1. The Initial email from me:

From: Randall C. Kennedy [rck@xpnet.com]

Sent: Wednesday, February 10, 2010 3:17 AM

To: Galen_Gruman@infoworld.com

Subject: Story Idea - Browser Trends

Galen,

Here’s a story idea: A look at browser usage trends within enterprises, using the repository data to back-up our analysis/conclusions. Here are a couple of examples that we could build off of:

http://exo-blog.blogspot.com/2010/02/ies-enterprise-resiliency-one.html

http://exo-blog.blogspot.com/2009/09/ie-market-share-holding-steady-in.html

Could be a good, in depth, research-y type of article, one that pokes holes in assumptions about IE’s decline, the nature of intranet web use, etc.

Anyway, let me know if you think this is worth exploring…thanks!

RCK

2. Galen’s First Reply:

From: Galen_Gruman@infoworld.com

Sent: Wednesday, February 10, 2010 10:51 AM

To: rck@xpnet.com

Subject: Re: Story Idea - Browser Trends

Hey Randy.

You covered the IE aspect last fall in your IW blog, and I saw that CW did a story along these lines based on your exo blog. So I'm not sure there's a further story with that theme.

I'd be more interested in what it takes for IT to move off of IE6. MS is encouraging them to do so, and Google and others are now saying they're dropping IE6 support. Of course, reworking IE6-dependent internal apps means spending time and money, so IT has an incentive to not do anything for as long as it can avid doing so. Unless there's a forced requirement to shift (I can't imagine companies saying users MUST use IE6 for legacy stuff and Firefox for Google and other providers' services, and I don't believe you can run or even install multiple versions of IE at the same time in Windows). Or maybe the shift away from IE6 is not as hard as IT may fear. What does it take? What could force IT to pull the trigger?

FYI I've asked Paul Krill to come up with a regular news story based on the exo data, much like how Gregg Keizer does the regular stories based on NetApplications data. It makes sense for IW to do the market monitoring stories from a business-oriented data pool, and that would also create news stories that are syndicated that highlight the exo system. I'm not sure if Paul should or can do something as predictable as Gregg always (he always does a Web browser share story and an OS share story), so thoughts welcome on that. Of course, your data is available all the time, so monthly is arbitrary; NetApp releases the data monthly, giving Gregg a predictable but inflexible schedule.

--

Galen Gruman

Executive Editor, InfoWorld

galen_gruman@infoworld.com

(415) 978-3204

501 Second St., San Francisco, CA 94107

3. My Reply to Galen:

From: Randall C. Kennedy [rck@xpnet.com]

Sent: Wednesday, February 10, 2010 2:44 PM

To: 'Galen_Gruman@infoworld.com'

Subject: RE: Story Idea - Browser Trends

Galen,

I have no problem with Paul Krill or anyone else writing-up my usage data (which took some very clever programming, not to mention some extensive data shaping/massaging, to generate). However, I’d appreciate it if he could follow Gregg’s lead and reference/link to the exo.blog and xpnet.com as the source of the data (i.e “Researcher’s from our affiliate, the exo.performance.network, have discovered…etc.”). This will help me to further establish xpnet.com as a distinct research brand and to begin to create a parallel distribution mechanism for my original content – one I can monetize through sponsorships, etc. It’s important that I raise the public profile of xpnet.com, and that means currying the attention of every beat writer, pundit or analyst I can find, both inside and outside of IDG. So if Gregg, or Paul – or even Ed Bott or Ina Fried – want to quote my stuff, I’m thrilled.

Fortunately, with InfoWorld mired in a “race to the bottom” (as Doug calls it) of the tabloid journalism barrel, there really should be little overlap between the kind of hard, data-driven research I’ll be publishing through my own blog and the “push their buttons again” format that seems to best fit InfoWorld’s mad grab for page views (quality be damned). Call it synergy. :)

RCK

4. Galen’s Second Reply:

From: Galen_Gruman@infoworld.com

Sent: Wednesday, February 10, 2010 11:13 PM

To: rck@xpnet.com

Subject: Re: Story Idea - Browser Trends

That was the idea.

And I take exception to the "race to the bottom" comment. Doug of all people should know that's not true. Otherwise, there would be no Test Center, never mind the massive investment it takes relative to its page views. That's a quality play. And even our populist features are good quality, not throw-away commentary.

You can push buttons based on real issues and concerns -- your Windows 7 efforts in 2008-09 showed that -- not on Glenn Beck-style crap.

If we wanted to go the "race to the bottom" approach, believe me, there'd be no Test Center, no features, and no blogs like yours, mine, McAllister's, Prigge's, Venezia's, Bruzzese's, Grimes', Heller's, Samson's, Rodrigues's, Lewis's, Marshall's, Linthicum's, Tynan-Wood's, Knorr's, Babb's, or Snyder's -- oh, wait, that's 90% of what we publish. Most of the other 10% -- Cringely and Anonymous -- are populist but hardly low-brow. That leaves the weekend slideshows from our sister pubs in the "race to the bottom" category.

--

Galen Gruman

Executive Editor, InfoWorld.com

galen_gruman@infoworld.com

(415) 978-3204

501 Second St., San Francisco, CA 94107

So far, I’ve demonstrated that Galen Gruman was intimately aware of the nature and structure of Devil Mountain Software, Inc., the exo.blog and my relationship to both. However, he wasn’t alone. Editor in Chief Eric Knorr was equally familiar with DMS and all aspects of my research publishing activities outside the IDG fold. Which is why, when he heard of the controversy over the infamous Windows 7 “memory hog” article at CW – an article that quoted Craig Barth extensively and made absolutely zero mention of Randall C. Kennedy – his first reaction was to send me the following email:

From: eric_knorr@infoworld.com

Sent: Friday, February 19, 2010 11:43 AM

To: randall_kennedy@infoworld.com; Galen_Gruman@infoworld.com; Doug_Dineley@infoworld.com

Subject: seen this?

http://blogs.zdnet.com/hardware/?p=7389%20&tag=content;wrapper

Is it war?

All of which begs the question: If Eric was as ignorant of the Craig Barth ruse as he claims to be, why would his first reaction to reading a story about some controversy involving Craig Barth and the exo.performance.network be to contact me?

The answer is simple: Because he knew. Just as Galen Gruman knew. They both were complicit in perpetuating the myth of the Craig Barth persona. And since they represent two-thirds of the senior editorial brain trust at InfoWorld (Doug Dineley was never clued in), this means that, for all intents and purposes, the publication known as InfoWorld was directly supporting my efforts to obscure my relationship to the exo.blog - the very same exo.blog they both keep commenting on in the above email exchanges - by allowing me to put forth a fictitious character as the CTO of DMS.

And here, to address the inevitable conspiracy theorist challenges to the validity of the above email record, is the header info for Galen’s second reply (item 4 above). The message was sent to me, Randall C. Kennedy (rck@xpnet.com), by Galen Gruman (Galen_Gruman@infoworld.com) on February 10th, 2010.

Return-Path: <Galen_Gruman@infoworld.com>

Delivered-To: rck@1878842.2041975

Received: (qmail 21591 invoked by uid 78); 10 Feb 2010 18:12:51 -0000

Received: from unknown (HELO cloudmark1) (10.49.16.95)

by 0 with SMTP; 10 Feb 2010 18:12:51 -0000

Return-Path: <Galen_Gruman@infoworld.com>

Received: from [66.186.113.124] ([66.186.113.124:49966] helo=usmaedg01.idgone.int)

by cm-mr20 (envelope-from <Galen_Gruman@infoworld.com>)

(ecelerity 2.2.2.41 r(31179/31189)) with ESMTP

id A4/73-22117-327F27B4; Wed, 10 Feb 2010 13:12:51 -0500

Received: from usmahub02.idgone.int (172.25.1.24) by usmaedg01.idgone.int

(172.16.10.124) with Microsoft SMTP Server (TLS) id 8.1.393.1; Wed, 10 Feb

2010 13:11:52 -0500

Received: from USMACCR01.idgone.int ([172.25.1.25]) by usmahub02.idgone.int

([172.25.1.24]) with mapi; Wed, 10 Feb 2010 13:11:57 -0500

From: <Galen_Gruman@infoworld.com>

To: <rck@xpnet.com>

Date: Wed, 10 Feb 2010 13:12:45 -0500

Subject: Re: Story Idea - Browser Trends

Thread-Topic: Story Idea - Browser Trends

Thread-Index: AQH7Zdt+Sb1cTPao5c3kdPVIphPlggLM2MUMAeHPlZWROLhPpw==

Message-ID: <C798371E.20D5A%galen_gruman@infoworld.com>

In-Reply-To: <003e01caaa35$a0b97de0$e22c79a0$@xpnet.com>

Accept-Language: en-US

Content-Language: en-US

X-MS-Has-Attach:

X-MS-TNEF-Correlator:

user-agent: Microsoft-Entourage/13.3.0.091002

acceptlanguage: en-US

Content-Type: multipart/alternative;

boundary="_000_C798371E20D5Agalengrumaninfoworldcom_"

MIME-Version: 1.0
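For anyone who wants to check the date and delivery path rather than take my word for it, here is a minimal sketch of one way to read a header like this using Python's standard `email` module. It works on a trimmed excerpt of the header above. The names `RAW_HEADER` and `to_utc` are my own illustrations, and the leading spaces on the folded lines are an assumption about the original layout, which the blog formatting flattened.

```python
from datetime import timezone
from email import message_from_string
from email.utils import parsedate_to_datetime

# Trimmed excerpt of the header above. Folded lines are re-indented with a
# leading space so the parser treats them as continuations (an assumption
# about the original layout).
RAW_HEADER = """\
Received: (qmail 21591 invoked by uid 78); 10 Feb 2010 18:12:51 -0000
Received: from usmahub02.idgone.int (172.25.1.24) by usmaedg01.idgone.int
 (172.16.10.124) with Microsoft SMTP Server (TLS) id 8.1.393.1; Wed, 10 Feb
 2010 13:11:52 -0500
Received: from USMACCR01.idgone.int ([172.25.1.25]) by usmahub02.idgone.int
 ([172.25.1.24]) with mapi; Wed, 10 Feb 2010 13:11:57 -0500
From: <Galen_Gruman@infoworld.com>
To: <rck@xpnet.com>
Date: Wed, 10 Feb 2010 13:12:45 -0500
Subject: Re: Story Idea - Browser Trends

"""


def to_utc(stamp):
    """Parse an RFC 2822 style date string and normalize it to UTC."""
    parsed = parsedate_to_datetime(" ".join(stamp.split()))
    if parsed.tzinfo is None:
        # A "-0000" offset comes back as a naive datetime; treat it as UTC.
        return parsed.replace(tzinfo=timezone.utc)
    return parsed.astimezone(timezone.utc)


message = message_from_string(RAW_HEADER)
sent_utc = to_utc(message["Date"])

# Each Received line ends with "; <timestamp>". The newest hop is listed
# first, so the last entry is the first server that handled the message.
hops_utc = [
    to_utc(value.rpartition(";")[2])
    for value in message.get_all("Received")
]

print("Date header (UTC):", sent_utc.isoformat())
for hop in hops_utc:
    print("Received hop (UTC):", hop.isoformat())
```

Normalizing everything to UTC makes it easier to compare stamps written by servers in different time zones. Small differences between hops are usually just clock drift between machines.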

Enjoy the carnage!

RCK

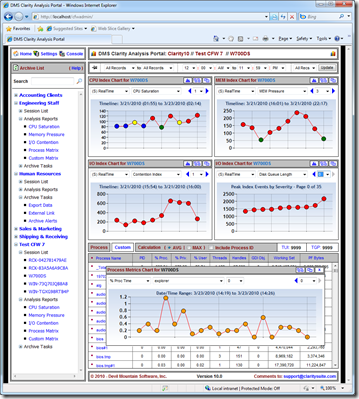

Figure 1 – Get This and Similar Charts at www.xpnet.com
